Why Architecture Teams Must Control Variability Before Scaling

In large-scale deployments, hardware failures rarely come from dramatic design flaws.

They come from something far more subtle — uncontrolled variability.

Architecture teams often talk about deterministic systems: predictable performance, stable behavior, repeatable deployments.

But many overlook a fundamental truth:

You cannot build deterministic hardware on top of non-deterministic components.

At scale, predictability is not an outcome — it is a discipline that must start at the component level.

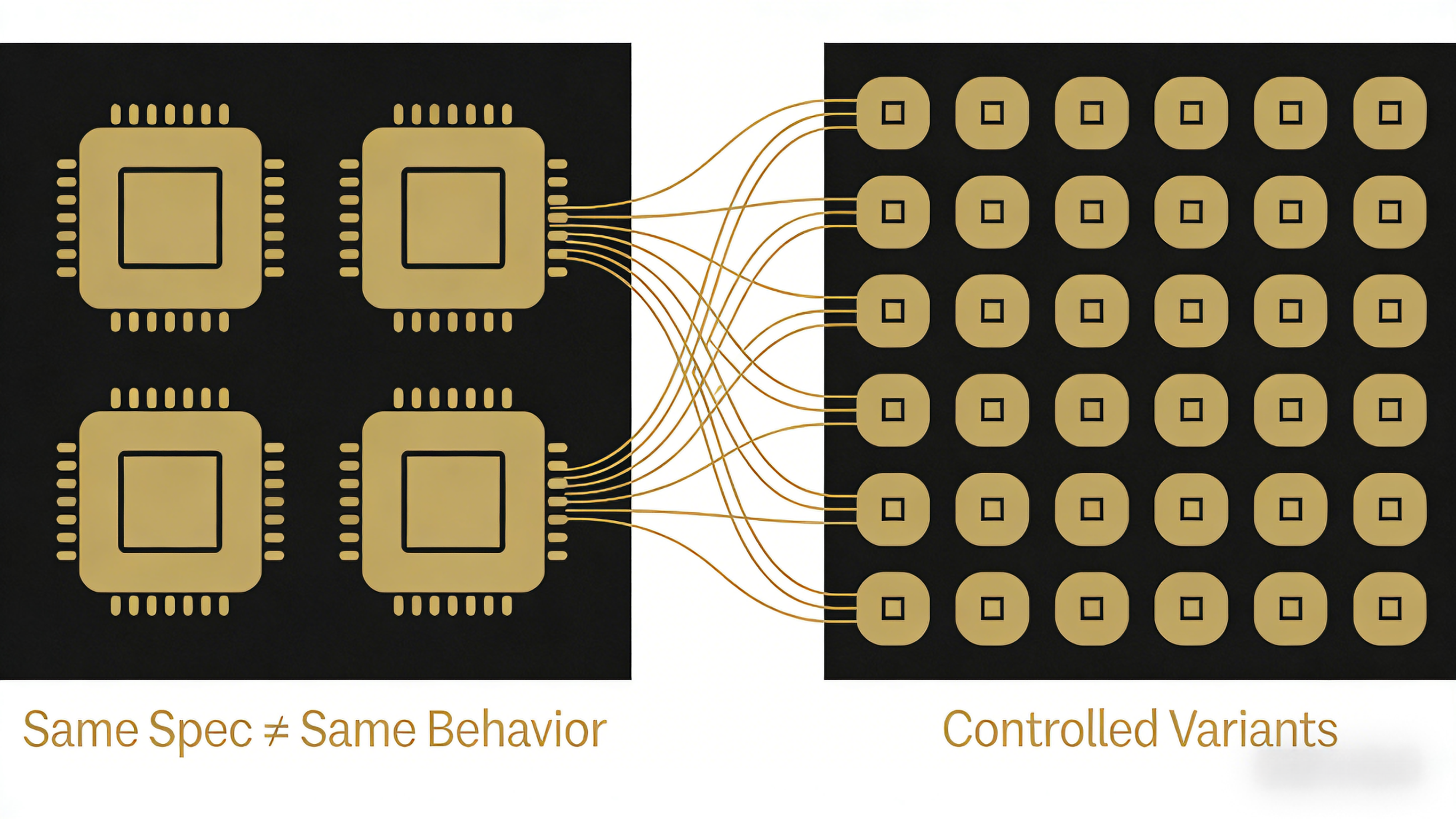

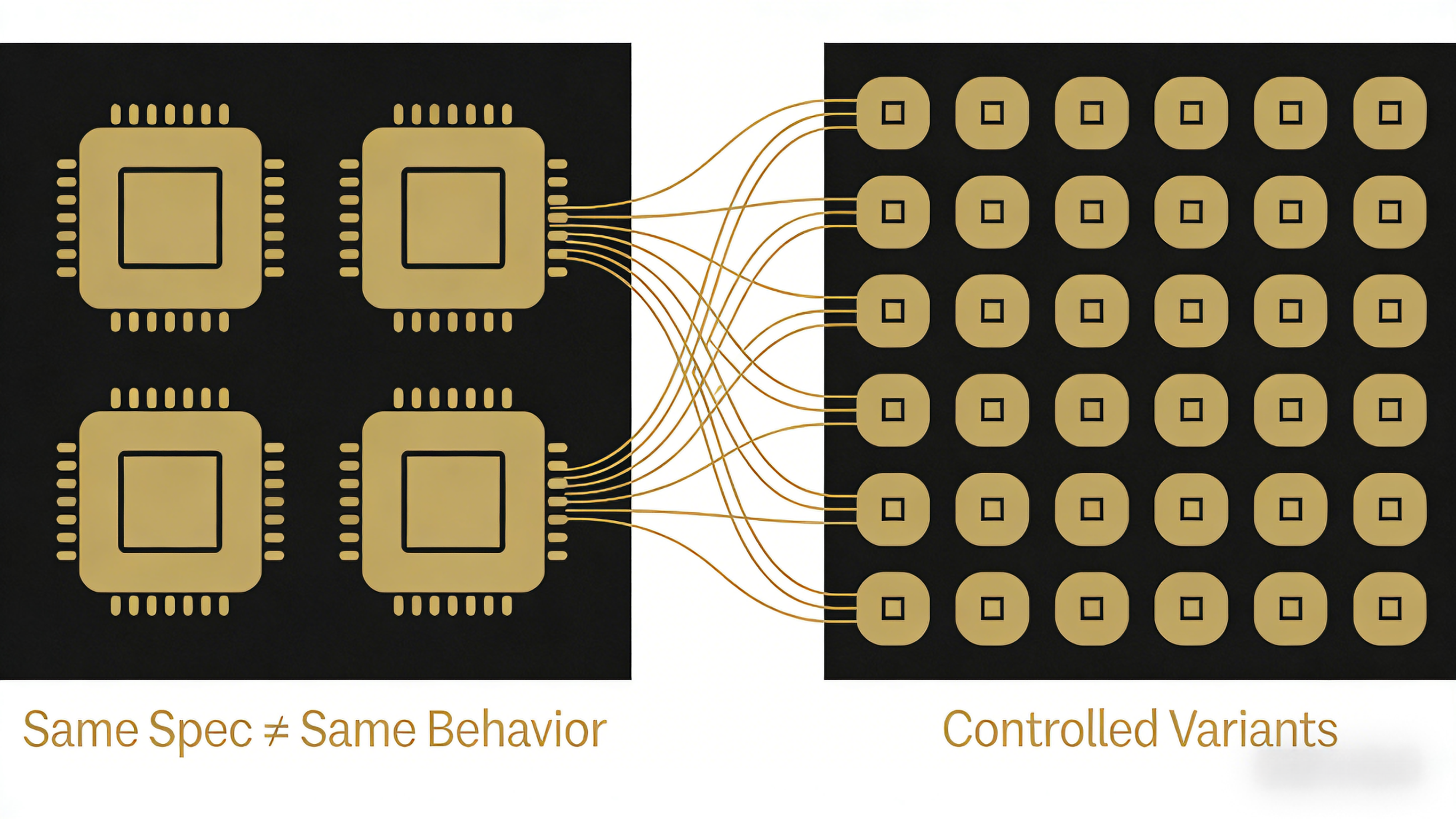

The Illusion of “Same Spec = Same Behavior”

On paper, everything looks identical.

Yet in production, architecture teams encounter:

PCIe devices intermittently disappearing

NIC negotiation behaving differently across nodes

Thermal throttling under identical workloads

Inconsistent power draw between supposedly identical systems

This is not bad luck.

This is component-level non-determinism leaking into system behavior.
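One way to make this kind of drift visible is to fingerprint each node's component inventory and count how many distinct builds exist in a fleet that is supposed to be uniform. The sketch below is illustrative only: the node names, field names, and version strings are hypothetical, and it assumes the inventory has already been collected by whatever tooling the team uses.

```python
import hashlib
import json
from collections import Counter


def fingerprint(inventory: dict) -> str:
    """Return a short, stable hash of a node's component inventory."""
    # sort_keys makes the hash independent of field order
    canonical = json.dumps(inventory, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


# Hypothetical inventories for three nodes purchased under the same spec.
fleet = {
    "node-01": {"cpu_stepping": "B1", "nic_fw": "22.5.7", "bios": "2.4"},
    "node-02": {"cpu_stepping": "B1", "nic_fw": "22.5.7", "bios": "2.4"},
    "node-03": {"cpu_stepping": "B1", "nic_fw": "22.0.1", "bios": "2.4"},
}

# Count how many nodes share each fingerprint.
groups = Counter(fingerprint(inv) for inv in fleet.values())
distinct_builds = len(groups)
print(f"{distinct_builds} distinct builds across {len(fleet)} nodes")
```

In this made-up fleet, node-03 carries a different NIC firmware and lands in its own group even though all three nodes match the same purchase spec.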

Why Architecture-Level Design Alone Is Not Enough

Modern architecture teams focus heavily on:

All of this assumes one thing:

The hardware layer behaves consistently.

When components vary in stepping, firmware defaults, tolerance ranges, or vendor-specific implementations, that assumption collapses.

The result?

More time spent debugging edge cases

Longer validation cycles

Lower confidence in scaling decisions

Architecture teams forced into reactive firefighting

What “Deterministic Components” Actually Mean

Deterministic components are not just compatible components.

They are components that meet four architectural requirements:

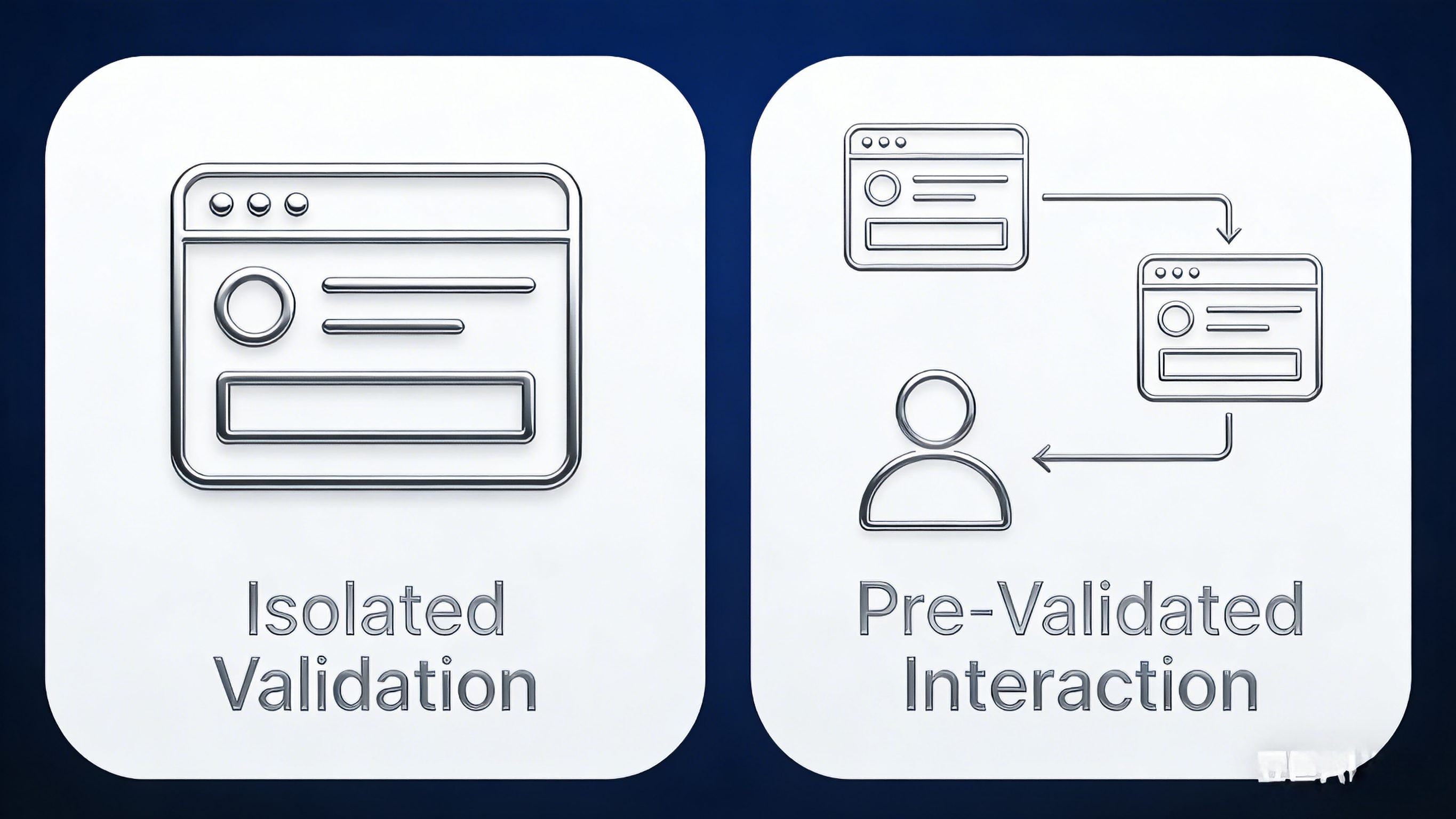

1. Controlled Variants

Every CPU stepping, memory IC revision, NIC controller version, and storage firmware is explicitly defined and tracked.

No silent substitutions.

No “equivalent parts” in production runs.
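As a minimal sketch of what "explicitly defined and tracked" could look like, the check below compares a node's reported parts against a pinned bill of materials and reports any substitution. The field names and revision strings are assumptions made for illustration, not taken from any real platform.

```python
# Hypothetical pinned bill of materials for one platform SKU.
APPROVED_BOM = {
    "cpu_stepping": "B1",
    "memory_ic_rev": "C",
    "nic_controller": "rev-A2",
    "storage_fw": "1.8.3",
}


def bom_deviations(node: dict, approved: dict = APPROVED_BOM) -> dict:
    """Map each deviating field to (expected, found).

    A field missing from the node report counts as a deviation (found=None).
    """
    return {
        field: (expected, node.get(field))
        for field, expected in approved.items()
        if node.get(field) != expected
    }


# A node that received an "equivalent" storage firmware.
node = {
    "cpu_stepping": "B1",
    "memory_ic_rev": "C",
    "nic_controller": "rev-A2",
    "storage_fw": "1.9.0",
}

deviations = bom_deviations(node)
print(deviations)
```

An empty result means the node matches the pinned BOM. Anything else is a substitution that should block the unit from a production run until it is explicitly approved.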

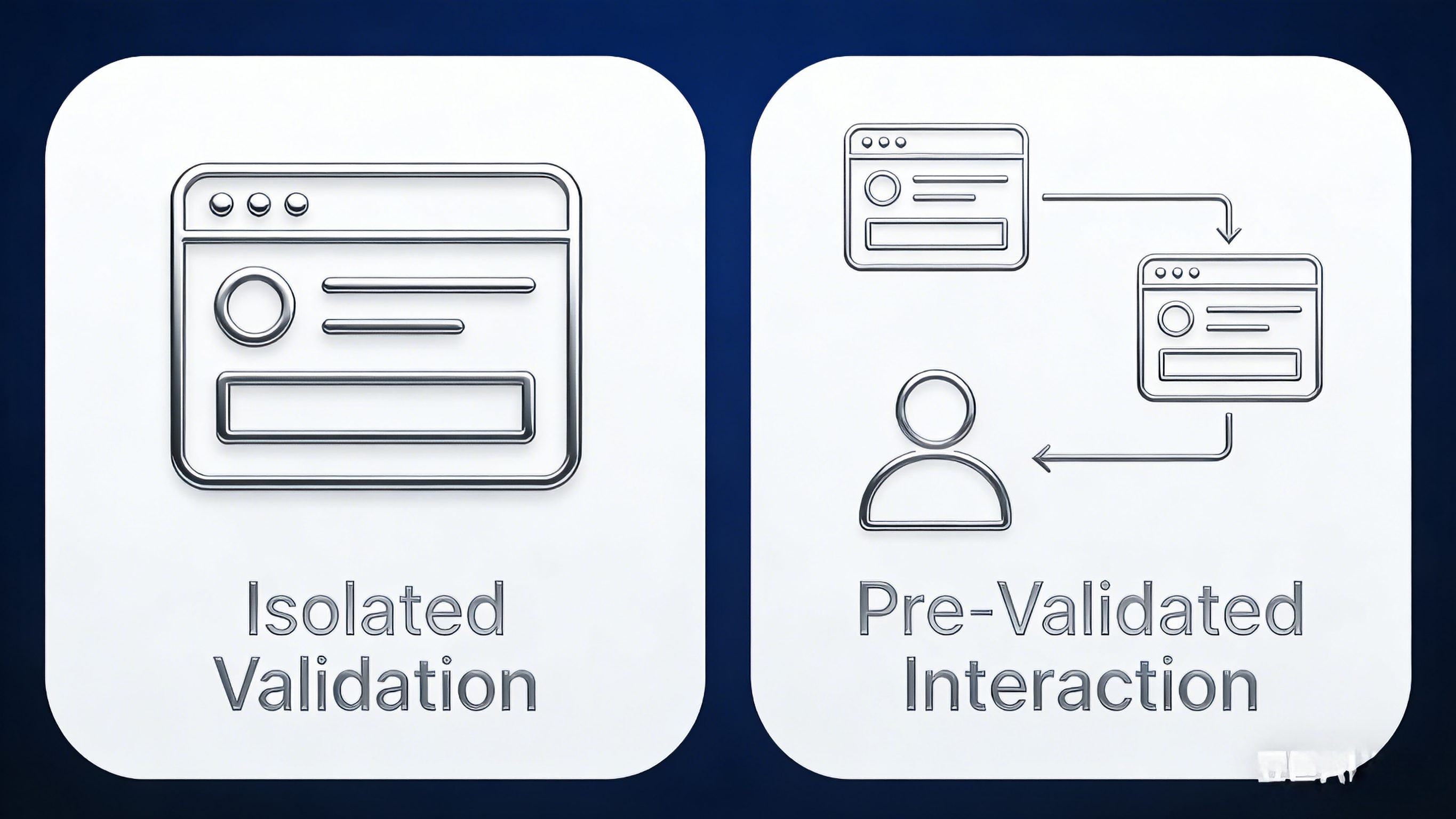

2. Pre-Validated Interaction

Components are not validated in isolation.

They are validated as a system:

CPU ↔ memory training behavior

PCIe lane allocation and bifurcation

Power delivery under sustained load

Thermal response under real workloads

Architecture teams need to know how components interact, not just that they pass individual tests.
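The difference between validating parts and validating interactions can be sketched by treating the validated unit as the whole combination rather than each part on its own. The tuple fields and values below are hypothetical.

```python
from typing import NamedTuple


class Combo(NamedTuple):
    cpu_stepping: str
    memory_ic_rev: str
    bios: str
    pcie_bifurcation: str


# Hypothetical set of combinations that passed system-level validation.
VALIDATED = {
    Combo("B1", "C", "2.4", "x8x8"),
    Combo("B2", "D", "2.5", "x16"),
}


def parts_seen_individually(combo: Combo) -> bool:
    """True if every value appears, in the same slot, in some validated combination."""
    return all(
        any(v[i] == value for v in VALIDATED)
        for i, value in enumerate(combo)
    )


def is_validated(combo: Combo) -> bool:
    """True only if this exact combination was validated as a system."""
    return combo in VALIDATED


# Every part here is "known good" somewhere, but never in this pairing.
candidate = Combo("B1", "D", "2.4", "x16")
print(parts_seen_individually(candidate), is_validated(candidate))
```

The candidate passes a per-part check and fails the system-level one, which is exactly the gap that isolated component testing leaves open.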

3. Stable Defaults, Not Vendor Assumptions

Many failures originate from default BIOS or firmware behaviors that differ across component batches.

Deterministic components come with:

Predictability comes from eliminating ambiguity, not adding more options.
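One possible way to remove that ambiguity is to pin every setting that matters in an explicit baseline and audit what each node reports against it, instead of trusting whatever default a batch shipped with. The setting names and values below are hypothetical, and how the reported settings are read from a node is assumed to be handled elsewhere.

```python
# Hypothetical firmware baseline: every relevant setting is stated explicitly.
BASELINE = {
    "c_states": "disabled",
    "pcie_aspm": "off",
    "turbo": "enabled",
}


def audit_settings(reported: dict, baseline: dict = BASELINE) -> list:
    """Return human-readable findings for settings that differ from the baseline."""
    findings = []
    for key, expected in sorted(baseline.items()):
        if key not in reported:
            # An unreported setting is treated as unknown, not as compliant.
            findings.append(f"{key}: not reported, cannot confirm baseline")
        elif reported[key] != expected:
            findings.append(f"{key}: expected {expected}, found {reported[key]}")
    return findings


# A node from a newer batch whose vendor default for ASPM changed.
reported = {"c_states": "disabled", "pcie_aspm": "l1"}
findings = audit_settings(reported)
for line in findings:
    print(line)
```

Treating a missing value as a finding is a deliberate choice in this sketch: a setting nobody can confirm is still an uncontrolled variable.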

4. Traceability Across the Lifecycle

When an issue appears six months later, architecture teams must be able to answer:

Which component batch was used?

Which firmware baseline was active?

Which configuration profile was deployed?

Without traceability, root-cause analysis becomes guesswork.
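The three questions above map naturally onto a per-unit build record. The following is a minimal sketch, assuming records are captured at deployment time. The record fields, serial numbers, and batch identifiers are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class BuildRecord:
    serial: str
    component_batch: str
    firmware_baseline: str
    config_profile: str
    deployed_on: date


# Hypothetical records written when each unit was deployed.
RECORDS = [
    BuildRecord("SN-1001", "batch-24A", "fw-2024.03", "storage-dense", date(2024, 4, 2)),
    BuildRecord("SN-1002", "batch-24A", "fw-2024.03", "compute", date(2024, 4, 2)),
    BuildRecord("SN-1003", "batch-24B", "fw-2024.06", "compute", date(2024, 7, 15)),
]


def nodes_sharing(records, **criteria) -> list:
    """Return serials of units whose record matches every given field value."""
    return [
        r.serial
        for r in records
        if all(getattr(r, field) == value for field, value in criteria.items())
    ]


# Six months later: which units share the suspect batch and firmware baseline?
affected = nodes_sharing(
    RECORDS, component_batch="batch-24A", firmware_baseline="fw-2024.03"
)
print(affected)
```

With records like these, a fault seen on one unit can be scoped to the units that share its batch, baseline, or profile instead of being investigated fleet-wide.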

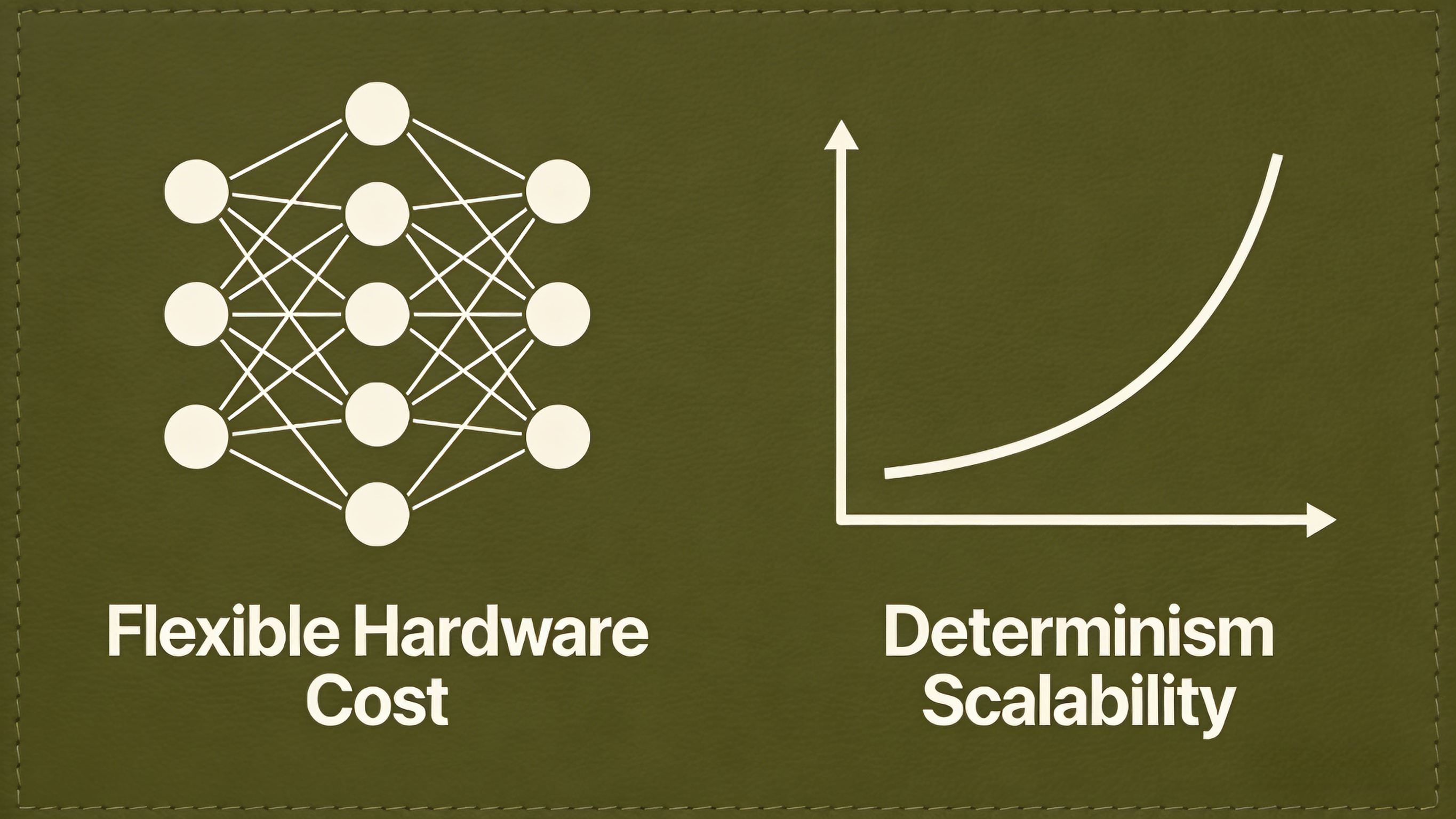

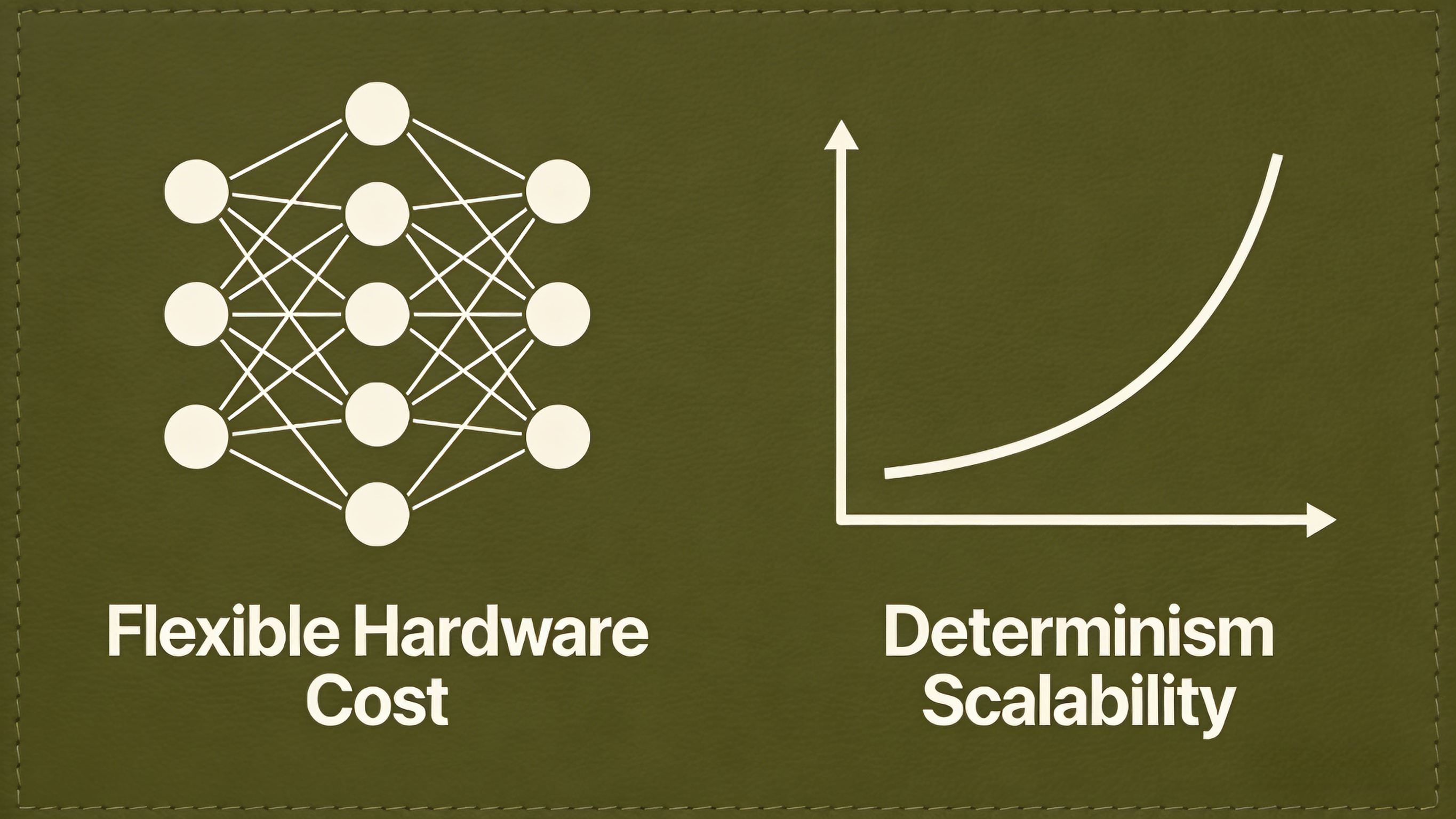

Why Cloud-Scale Teams Reject “Flexible Hardware”

Many OEM customers still believe flexibility is an advantage.

Cloud-scale and hyperscale teams believe the opposite.

They optimize for:

Fewer SKUs

Fewer configurations

Fewer exceptions

Because every additional variable multiplies validation cost: each new variant has to be tested against every combination of the others.

From an architecture perspective:

Flexibility is expensive. Determinism is scalable.
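The multiplication can be made concrete with a rough count. The sketch below assumes the worst case, where every combination of allowed variants must be validated as a system. The slot names and variant counts are hypothetical.

```python
from math import prod


def validation_matrix_size(variants_per_slot: dict) -> int:
    """Number of distinct combinations if every variant can pair with every other."""
    return prod(variants_per_slot.values())


# One approved variant per slot versus a handful of "equivalent" options.
pinned = {"cpu_stepping": 1, "memory_ic_rev": 1, "nic_fw": 1, "storage_fw": 1}
flexible = {"cpu_stepping": 2, "memory_ic_rev": 3, "nic_fw": 2, "storage_fw": 3}

pinned_size = validation_matrix_size(pinned)
flexible_size = validation_matrix_size(flexible)
print(pinned_size, flexible_size)
```

Under these assumptions, allowing two or three alternatives in each of four slots turns one configuration to validate into 36. Real programs can prune some combinations, but the growth is multiplicative rather than additive.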

From Components to Architecture Confidence

When deterministic components are enforced, architecture teams gain:

Predictable performance envelopes

Faster validation and rollout cycles

Fewer production surprises

Higher confidence in capacity planning

Most importantly, they can shift their focus back to architecture design instead of hardware debugging.

Final Thought

Deterministic hardware is not achieved by better diagrams or more automation alone.

It starts with a simple but disciplined principle:

If components are not deterministic, systems never will be.

For architecture teams designing platforms meant to scale, component discipline is not a manufacturing concern; it is an architectural responsibility.